Do you have a spreadsheet, JSON, or plain text file filled with data that you haven’t come to terms with? If you’re using Mac OSX, you already have a powerful tool at your disposal for exploring and cleaning a text-based data set. By using terminal commands, you can get a feel for an otherwise unwieldy file or set of files, searching and sorting in a way that is fast, flexible, and (perhaps most importantly) reproducible.

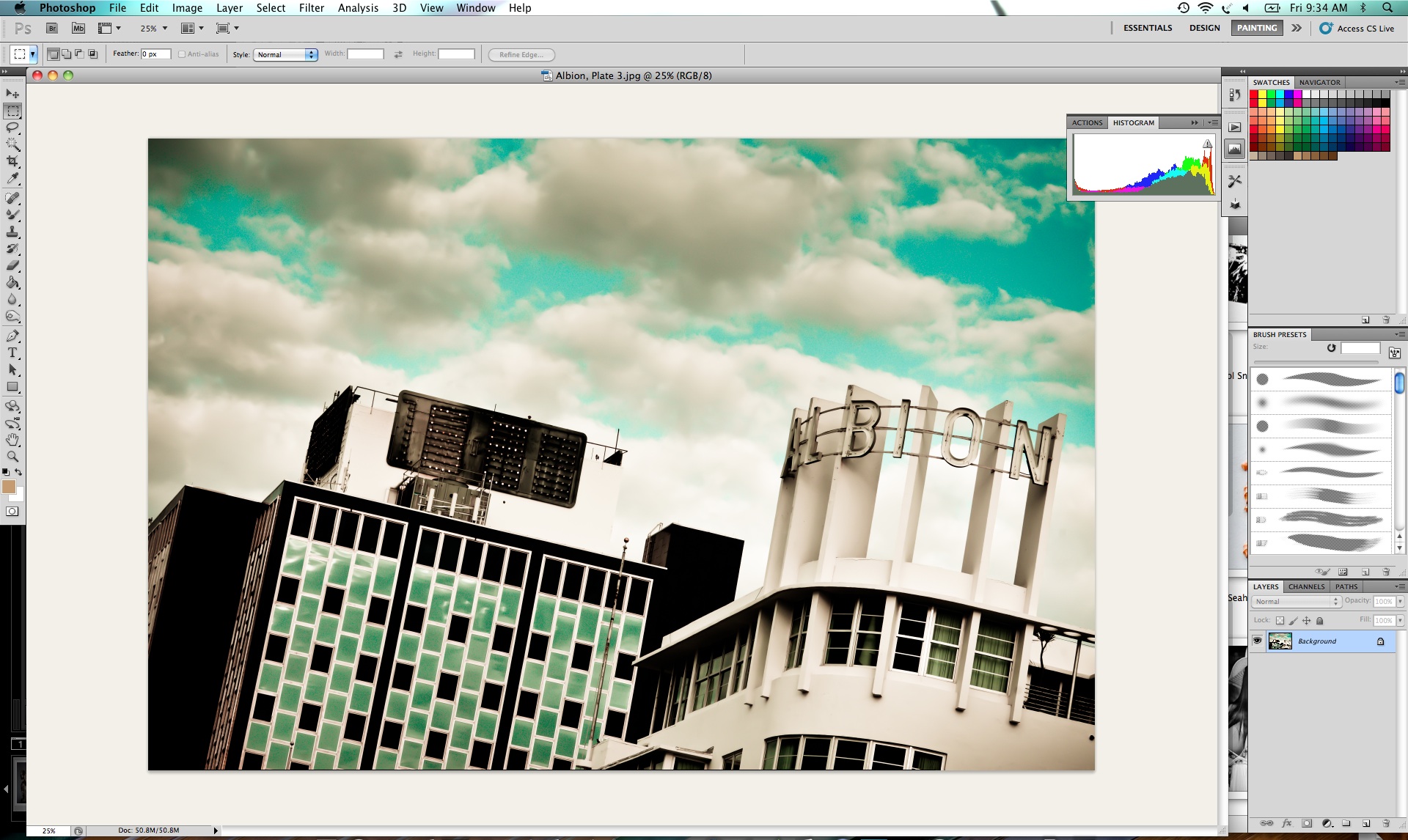

On OSX, you can open your terminal (also known as the bash or UNIX shell) by clicking the Finder button and typing "terminal." You’ll see a prompt with your computer name and a dollar sign:

psmyth $For this article, I’ll be using the "works" version of the Open Library data dump, which contains information on over fifteen million books in a file that is just over 8 GB. If your data is in a non-text format such as .xls, you should export it to a text-based format such as .csv before continuing.

To begin, navigate to the folder where you’ve placed your data. (If you’re not sure how to navigate to a folder on the command line, check out the (very short) chapters on navigation in Learn Bash the Hard Way.)

$ cd projects/data

~/projects/data $ pwd

/home/patrick/projects/data

~/projects/data $ ls

data.txtNote that file extensions for plain text formats such as .csv, .txt, .md, or .json are mostly just conventions and are ignored by most command-line utilities, so don’t worry if your file isn’t a .txt. Let’s start by looking at a snippet of the file to get a sense of how our data is structured:

~/projects/data $ head data.txt

/type/work /works/OL10001509W 3 2010-04-28T06:54:19.472104 {"title": "A la recherche de l'\u00e2me de mon p\u00e8re", "created": {"type": "/type/datetime", "value": "2009-12-11T01:57:29.804644"}, "covers": [3143247], "last_modified": {"type": "/type/datetime", "value": "2010-04-28T06:54:19.472104"}, "latest_revision": 3, "key": "/works/OL10001509W", "authors": [{"type": "/type/author_role", "author": {"key": "/authors/OL3966680A"}}], "type": {"key": "/type/work"}, "revision": 3}

...The "head" command prints out the first screen of information about a given file, perfect for seeing how data is structured in general terms. Looking at this output, we see that the file is in tab separated format, and that the last column contains data in JSON format. Note that using a command like "head" is fast and reliable, since many text editors and spreadsheet utilities experience slowdowns or odd behaviors when working with very large files. To page through more of the data or to see the last section of the file, use:

$ less data.txtor

$ tail data.txtAnother useful place to start when examining a file is finding out how many lines or entries it contains. To find out how many books are contained in data.txt, run

~/projects/data $ cat data.txt | wc -l

15973949This command may take a few seconds to run in a very large file, and will return the number of lines in the file. In this case, our data.txt contains 15,973,949 books. That’s a lot of books!

Note that the | (or "pipe") symbol allows one bash utility to pass its output to another. The "cat" command returns all the lines in a file, and the "wc -l" command counts the lines given it by the "cat" command. Pipes are a flexible way to chain utilities together, and are great for sorting and presenting data.

In my case, I’m only interested in books in (or about) the science fiction genre. Let’s redirect all the lines that contain the words "science fiction" into a new file:

~/projects/data $ cat data.txt | grep -i "science fiction" > sci_fi_data.txt

~/projects/data $ ls

data.txt sci_fi_data.txtIn the command above, the "grep" utility sorts out lines that contain a particular pattern, in this case "science fiction." The -i flag indicates that grep should ignore differences in upper and lower case while searching. The ">" (or "redirect") symbol sends the information to a newly-created file, in this case sci_fi_data.txt.

Let’s see how many science fiction books we have:

~/projects/data $ cat sci_fi_data.txt | wc -l

12784Out of our fifteen million books, 12,784 have "science fiction" in either the title or the subject listing. We can use a similar method to find out how many books by particular authors we have in sci_fi.txt. After looking up the ID of Ursula Le Guin on the Open Library website ("OL31353A"), we see how many of her books are present in our data:

~/projects/data $ cat sci_fi_data.txt | grep -i "OL31353A" | wc -l

28We can also use the command line to begin cleaning our data. First, we can use the "uniq" command to remove duplicate entries:

~/projects/data $ uniq sci_fi_data.txt > sci_fi_data_no_duplicates.txtor to sort our entries in alphabetical order:

~/projects/data $ sort sci_fi_data.txt > sci_fi_data_alphabetical.txtWe can also use the "grep" command to remove incomplete entries, such as lines with no author information:

~/projects/data $ cat sci_fi_data.txt | grep author > sci_fi_data_cleaned.txtThe above commands can also be combined into one:

~/projects/data $ uniq sci_fi_data.txt | sort | grep authors | grep description | grep subjects > sci_fi_data_cleaned.txtThis command creates a new file based on sci_fi_data.txt that is ordered alphabetically, has duplicates removed, and contains only those entries with descriptions, subject listings, and author information.

If you want to create a quick record of the commands you used to clean your data, run

$ history 200 > history.txtto save your last 200 commands to a text file. However, this will also save mistakes and revisions, and so it may be difficult to use in reproducing your workflow later. Once you’re comfortable with the command line, you may want to look into creating bash scripts to process your data. These can be used later in other projects or shared alongside your data to show the steps you took to reach your end product.