Web Scraping – Social Media

GC Digital Fellows Workshop October 19, 2015

INTRODUCTION

DISCUSSION

TUTORIAL: STATIC SITE

TUTORIAL: RSS FEED

TUTORIAL: USER NETWORKS

TUTORIAL: TWITTER TOPICS

INTRODUCTION

The Internet (broadly defined) has drastically changed modern life and the way that information is transferred. It is only logical that researchers would correspondingly wish to study the data made available through the World Wide Web and Social Media. This tutorial will help you get started collecting data from websites. As with anything, there are more complicated ways to collect this data that give you more flexibility and control. The tools presented here have a very limited range, but are an excellent first step for either collecting the data that you need or as a jumping off point to understand what is happening when scraping pages or sites.

There are really two types of web scraping: Collecting data from a structured website or news feed and collecting network information and content from users. Both types are introduced here.

Discussion

- Collecting data from Social Media

- Data that is dynamic and continues to be added to – put in by user of the site

- Relies on an API to get the information

- Can “crawl” a series of connected users

- Web scraping

- Data that is static on the page – put in by page creator.

- Relies on the structure of the site to get the information.

- Can “crawl” a series of connected webpages

- What kind of research questions can be asked?

- Data – Web Scraping

- People – Network Analysis

- Topics – Social Media Scraping

- Ethical Issues

- Who’s data is it?

- Robots.txt

- A few types

- Web Scraping (pulling information off webpages)

- News Feeds (pulling information off an ongoing feed)

- Social Media Scraping (involves an API)

- Webscraping Tools

- Google Sheets

- Bots

- Python, Scrapy, MongoDB (not covered in this workshop, please come to office hours or the Python Users’ Group)

- Python, BeautifulSoup (also not covered in this workshop, please come to office hours or the Python Users’ Group)

- Python, Tweepy (also not covered in this workshop, please come to office hours or the Python Users’ Group)

- Social Tools

- TAGS https://tags.hawksey.info/get-tags/

- NodeXL http://nodexl.codeplex.com/

- R (not covered in this workshop. Can be used for twitter or Facebook)

- Crawlers

- CURL (also not covered in this workshop, please come to office hours or the Python Users’ Group)

TUTORIALS

TUTORIAL: STATIC SITE

IMPORTHTML GOOGLESHEETS

Scraping the information off a well structured website (i.e., Wikipedia). Static data contributed by the page developer, not time dependent.

Let’s say I am researching global literacy rates, and want to pull in a table from Wikipedia (or any other page with data in tables).

- Open new GoogleSheet

- in cell A1 type: =IMPORTHTML(“https://en.wikipedia.org/wiki/List_of_countries_by_literacy_rate “, “table”, 5)

- “table” means the information is stored in a table,

- “list” means the information is stored in a list.

- “5” means that it’s the 5th table on the page

- Try this now with Human Development Index https://en.wikipedia.org/wiki/List_of_countries_by_Human_Development_Index

- Should look like this.

(can also use IMPORTDATA to pull data from pages that are just tables if using NYCOpenData, GoogleMaps or something similar)

TUTORIAL: RSS FEED

IMPORTFEED, GOOGLESHEETS

Scraping the information off a RSS feed or similar (i.e., NPR, googlenews, etc.). This is essentially a list of data. The information is collected by automatic compilation, time dependent.

Let’s say I want to compare how npr, cnn, and msn write their summaries. For this, I will need to import data from an RSS Feed.

- Open a new GoogleSheet

- in cell A1 type:

- =IMPORTFEED(“http://www.npr.org/rss/rss.php?id=1001”, , TRUE)

- “ “ is a placeholder for the query, which tells it which items to collect (in this case, all of them)

- “TRUE” tells it that I want the column headers

- Try this now with CNN http://rss.cnn.com/rss/cnn_topstories.rss

- Should look like this.

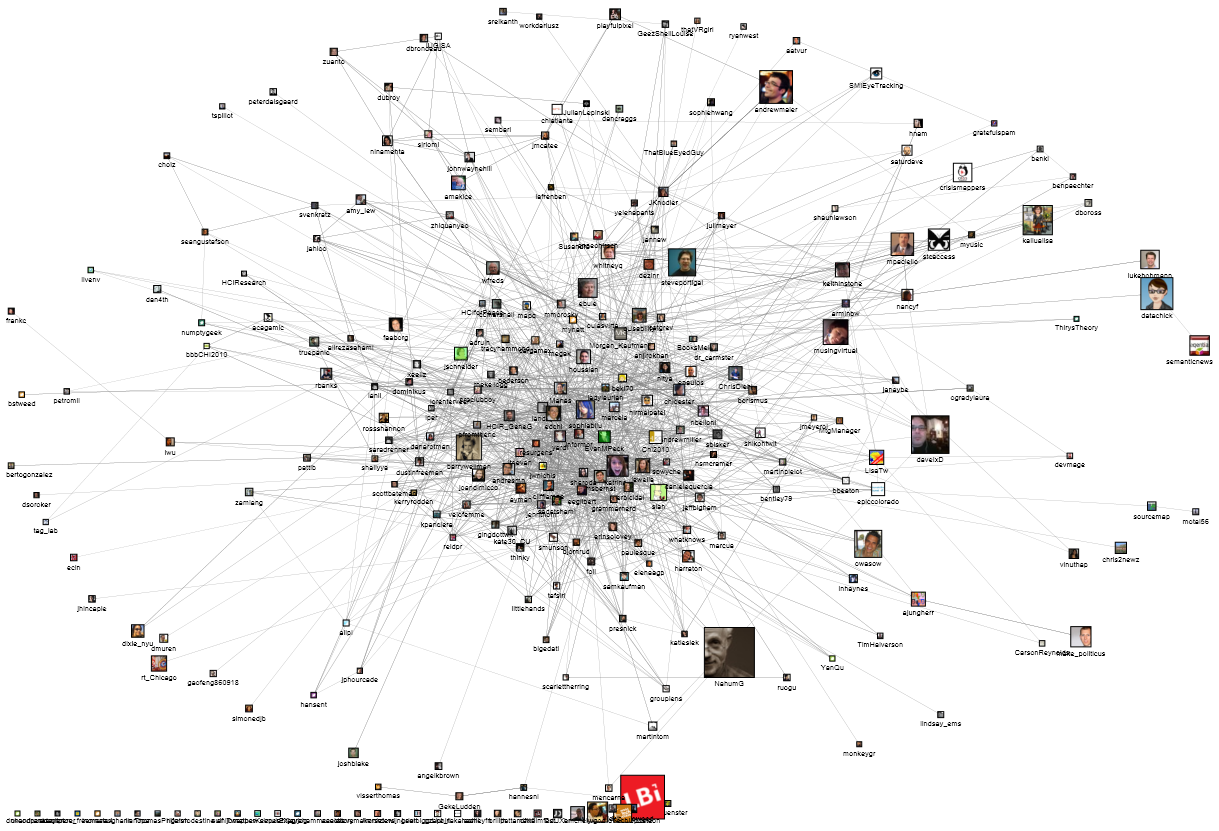

TUTORIAL: USER NETWORKS

NODEXL, EXCEL SPREADSHEET

Collecting Network data with NodeXL. This type of data shows you relationships – how people are connected, and the strength/shape of communities and networks.

- Download NodeXL http://nodexl.codeplex.com/ Only runs on Excel for WINDOWS (can be run on GC computers though 🙂 )

- Open and run it on your computer.

- It may take multiple tries to get it running

- It will show up as a template in the toolbar when you open a new Excel spreadsheet.

- If it does not automatically appear, search for NodeXL in “Search Programs and Files” in the Windows Search Bar (lower left corner), and double click on it from there.

- NodeCL Basic will appear in the toolbar

- Click on Import (upper left hand corner), and authorize it using your own twitter identification (radio button at bottom)>Twitter Search Network (or whatever you want to search, though some are only available for “pro”)

- You must be logged in to twitter for this to work (another window will open). It will be using the data from your account

- Find something/someone you are interested in and enter their Twitter Handle into the dialogue box: “Search for tweets that match this query”

- LATISM_NYC

- GCDigitalFellows

- Play around with the choices depending on how much data you want. (Less is more, this is SLOW)

- The Twitter API changes all the time with how much data you can collect over how much time. Start small until you know what you need.

- This gives you a feed of how people are related, tweets they mention each other in, the tweet, and the follows/following relationships. These form the basis of your user network.

- Can make graphs using the panel next to Import/Export. This gives some simple analysis and shows the relationships of users or relationships of topics.

- To use this with Gephi, export the file as a GraphML (only available for Pro)

TUTORIAL: TWITTER TOPICS

TAGS, GOOGLESHEETS

This might be the most common type of analysis for academics, and is what most people think of when talking about “social media scraping”. The information on these site is not static, and is constantly being updated, so scraping doesn’t work the same way as for a website. These sites also want to know who is doing what, so they require an API (Automated Programming Interface). This basically is a key so that your program can interact with their program. Hawksey created a beautiful spreadsheet to do this for you called TAGS. We are going to use that here. The same principles apply to scrape data from YouTube or Facebook, but these all require some programming in Python or R.

Collecting data about trending topics with TAGS. TAGS lets you collect Twitter data using Google Sheets.

- Open the TAGS Sheet, then MAKE A COPY

- Click Enable Custom Menu to get the TAGS menu on your toolbar

- TAGS>Set Up Twitter Access

- You will need to fill out why you are doing this, and have your Twitter account 100% set up.

- Copy/Paste the OAuth API and Secret

- Search for any term

- Go to TAGS>Run Now!

- The data appears on the Archive Tab. Be sure to use the TAGS menu to make any changes

- It should look like this.

Image from NodeXL, Credit to Marc Smith