This post may be useful to folx who are interested in starting a web-scraping workshop but are not sure where to begin. In it, I recap the Beginner’s Guide to Web Scraping workshop that took place earlier in the semester.

I based this workshop on Compute Canada HSS’s Web-Scraping Workshop. The workshop covered some core concepts of web-scraping and when it is useful, and introduced a GUI (Graphical User Interface) tool to help folx get started on a web-scraping project. We ended with a short discussion of next steps and further exploration.

As this was a workshop geared towards beginners and foundational concepts, I spent some time defining web-scraping. Web-scraping is a method of extracting information from websites: it takes unstructured data (e.g. long-form text) and transforms it into semi-structured or structured data (e.g. spreadsheets). While the term tends to conjure up images of complicated code and scripts, web-scraping can be as straightforward as copying-and-pasting from a webpage into a spreadsheet. In fact, if you only need information from a couple of web pages, this manual method can be a lot quicker and less error-prone than automating it.
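To make the “unstructured to structured” idea concrete, here is a minimal Python sketch (Python being one of the languages mentioned later in this post). It assumes some made-up text copied from a page, where each line follows a predictable “Title (Year) directed by Name” pattern; the pattern, the sample text, and the function names `text_to_rows` and `rows_to_csv` are all hypothetical. It uses only the standard library’s `re` and `csv` modules to turn that text into spreadsheet-ready CSV.

```python
import csv
import io
import re

# Made-up "unstructured" text, as it might look after copying from a page.
RAW_TEXT = """
Film A (1994) directed by Jane Doe
Film B (2008) directed by John Smith
"""

# Hypothetical pattern: a title, a four-digit year in parentheses, then a name.
LINE_PATTERN = re.compile(
    r"^(?P<title>.+?) \((?P<year>\d{4})\) directed by (?P<director>.+)$"
)


def text_to_rows(text):
    """Turn each matching line of free text into a dict (one dict per row)."""
    rows = []
    for line in text.strip().splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match:  # skip lines that don't fit the expected shape
            rows.append(match.groupdict())
    return rows


def rows_to_csv(rows):
    """Serialize the rows as CSV text that a spreadsheet program can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "year", "director"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(rows_to_csv(text_to_rows(RAW_TEXT)))
```

The regular expression plays the role of your eyes when copying-and-pasting: it decides which piece of each line belongs in which column.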

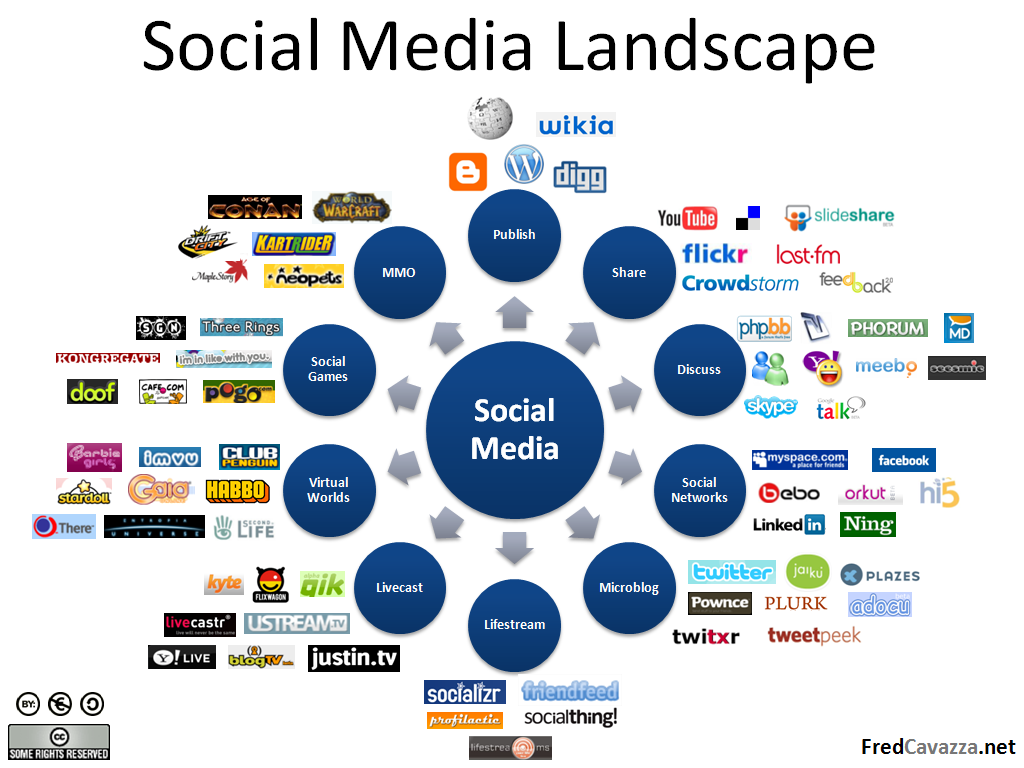

With that said, when is it worthwhile to automate web-scraping? Usually, when you want to scrape a large amount of information, and/or when you want to scrape a page repeatedly over a period of time. Once you have set up a retrieval method, automation helps you collect information systematically and can take less effort than manually navigating to a page and copying-and-pasting. However, before you begin automated scraping, consider whether scraping is your best option at all. Some websites and platforms, such as Twitter, offer access to their data through an API (Application Programming Interface), and others, such as Our World in Data, let you download datasets directly. When these options exist, they are often easier than scraping: the information is already stored in a semi-structured or structured format and will require less cleaning than data you scrape yourself.
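When a site does offer an API, the data often arrives already structured (many APIs return JSON), so there is little left to parse. Here is a rough Python sketch, assuming a hypothetical endpoint (`EXAMPLE_URL`) that returns something shaped like `{"items": [{"name": ..., "value": ...}]}`. A real API will have its own URL, response shape, and often authentication requirements, so check its documentation before adapting this.

```python
import json
from urllib.request import urlopen

# Hypothetical endpoint: replace with the URL given in the API's documentation.
EXAMPLE_URL = "https://example.org/api/items?format=json"


def fetch_json(url, timeout=10):
    """Download a JSON response and decode it into Python lists and dicts."""
    with urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def to_rows(payload):
    """Flatten a payload shaped like {"items": [{"name": ..., "value": ...}]}.

    The "items", "name", and "value" keys are assumptions for illustration;
    a real API documents its own structure.
    """
    return [(item["name"], item["value"]) for item in payload.get("items", [])]
```

With a real endpoint, `to_rows(fetch_json(url))` would give a list of `(name, value)` tuples ready for a spreadsheet, with no HTML parsing involved.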

If you are sure that web-scraping is your best option, you will also have to consider whether you can scrape everything you want. Intense scraping places high demands on servers and can break sites. Websites run by small, grassroots organizations often have limited server capacity, and heavy demand (e.g. constant, rapid requests to their site) can bring them down. In addition, websites often have a robots.txt file (e.g. Internet Archive’s robots.txt file) that indicates which parts of the site automated tools may access, and how. While this file is mostly advisory and there is no real legal means of enforcing it, it does signal how the site owners would like their website to be accessed. Lastly, there may be terms of use governing what you can do with the information you obtain: some sites allow you to scrape their information but not redistribute it. Browse a site’s privacy policy and terms of use to get a sense of what you can do with the data you collect.
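If you do write your own scraper, Python’s standard library can read robots.txt for you through `urllib.robotparser`. The sketch below parses a made-up robots.txt (rather than downloading a real one) so it runs offline, checks whether two hypothetical URLs may be fetched, and uses the file’s `Crawl-delay` value, if there is one, as the pause between requests. The user-agent name and the 2-second fallback delay are assumptions. For a live site, you would instead call `set_url()` with the site’s robots.txt address and then `read()`.

```python
from urllib import robotparser

# Made-up robots.txt contents for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Create a RobotFileParser from robots.txt text we already have."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def polite_delay(parser, user_agent, default=2.0):
    """Seconds to wait between requests: the site's Crawl-delay, else a default."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


if __name__ == "__main__":
    parser = build_parser(SAMPLE_ROBOTS_TXT)
    agent = "my-workshop-scraper"  # hypothetical user-agent name
    for url in ["https://example.org/public/page.html",
                "https://example.org/private/page.html"]:
        verdict = "allowed" if parser.can_fetch(agent, url) else "disallowed"
        print(url, verdict)
    print("wait", polite_delay(parser, agent), "seconds between requests")
```

Checking `can_fetch()` before each request and sleeping for the crawl delay between requests are two simple habits that go a long way towards not overloading a small site.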

When you are ready to start your web-scraping project, it also helps to understand what makes up a webpage, even if you are using GUI tools. This will help you identify where a webpage stores the information you want and how you might extract it. Simply put, webpages are made up of HTML (HyperText Markup Language); if you are new to it, do check out our introductory workshop, which will help you orient yourself around the terms and structures that make up a page.
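To see that structure for yourself, you can ask a parser to outline a page’s tags. This minimal sketch uses Python’s built-in `html.parser` module on a made-up HTML fragment and records each tag’s nesting depth and `class` attribute, which are often the “hooks” scrapers use to locate information. The sample markup and class names are hypothetical, and the depth tracking assumes every tag is closed apart from a few common void elements such as `<br>`.

```python
from html.parser import HTMLParser

# A made-up page fragment for illustration.
SAMPLE_HTML = """
<html>
  <body>
    <h1>Reading list</h1>
    <ul class="books">
      <li class="book">First title</li>
      <li class="book">Second title<br></li>
    </ul>
  </body>
</html>
"""

# Elements that never get a closing tag, so they must not change the depth.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class OutlineParser(HTMLParser):
    """Record each opening tag with its nesting depth and class attribute."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.outline = []  # (depth, tag, class) tuples, in document order

    def handle_starttag(self, tag, attrs):
        self.outline.append((self.depth, tag, dict(attrs).get("class")))
        if tag not in VOID_TAGS:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS:
            self.depth -= 1


def outline(html):
    """Return the (depth, tag, class) outline of an HTML string."""
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    return parser.outline


if __name__ == "__main__":
    for depth, tag, css_class in outline(SAMPLE_HTML):
        label = f"{tag}.{css_class}" if css_class else tag
        print("  " * depth + label)
```

The indented printout mirrors what your browser’s “Inspect” panel shows: the two `li.book` elements sit inside `ul.books`, which is exactly the kind of pattern a scraper, or a plug-in recipe, latches onto.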

During the workshop, we discussed Data Miner’s Data Scraper, a Google Chrome browser plug-in, and practiced using it on a couple of websites such as IMDb and the BBC front page. The main benefit of a browser plug-in like Data Scraper is convenience: it lets us get straight to extracting information from a website. The plug-in comes with default recipes as well as user-contributed recipes, which can speed up the process for frequently scraped sites such as Google Scholar. In addition, the option to build custom recipes through a clear step-by-step process makes it beginner-friendly. The recipe-making tool guides us through identifying the rows and columns that make up our extracted information, helping us transform unstructured data into a structured format.
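In a script, the same rows-and-columns idea becomes explicit. The sketch below is not how Data Scraper works internally; it is a hypothetical “recipe” written as a Python dictionary, where one selector picks out each row and one selector per column picks a value inside that row. It uses the standard library’s `xml.etree.ElementTree`, whose limited XPath support handles simple `[@class='...']` filters. Because ElementTree is an XML parser, this only works on well-formed markup like the made-up sample here; messy real-world HTML is where packages such as bs4 come in.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed markup standing in for a news front page.
PAGE = """
<div>
  <div class="story"><h3>Headline one</h3><a href="/one">more</a></div>
  <div class="story"><h3>Headline two</h3><a href="/two">more</a></div>
</div>
"""

# Hypothetical recipe: a row selector plus (path, attribute) per column.
# An attribute of None means "use the element's text".
RECIPE = {
    "rows": ".//div[@class='story']",
    "columns": {
        "headline": ("h3", None),
        "link": ("a", "href"),
    },
}


def apply_recipe(markup, recipe):
    """Return one dict per matched row, with one key per recipe column."""
    root = ET.fromstring(markup.strip())
    table = []
    for row in root.findall(recipe["rows"]):
        record = {}
        for name, (path, attr) in recipe["columns"].items():
            element = row.find(path)
            if element is None:
                record[name] = None  # column missing in this row
            elif attr is None:
                record[name] = (element.text or "").strip()
            else:
                record[name] = element.get(attr)
        table.append(record)
    return table
```

Calling `apply_recipe(PAGE, RECIPE)` yields one record per story, each with a `headline` and a `link`. Writing the recipe down as data like this is also what makes it easy to share with teammates.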

While a browser plug-in can be really helpful for getting started, and perhaps even for finishing a simple project, we soon run into the limitations of such tools. For example, certain features of Data Scraper are limited to the paid version, and there is a limit on the volume of data we can scrape. In addition, GUI tools make it harder to replicate our steps, and the process is not always transparent: we are not always sure how a tool extracted the data, and we may find it hard to explain to team members the steps we took to create our scraping recipe. Writing our own web-scraping scripts can help address some of these concerns. Hence, when we feel ready to move beyond GUI tools and browser plug-ins, we might consider learning a programming language to take our web-scraping projects further.
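Part of what makes a script more transparent is that every choice is written down. In this minimal sketch, the user-agent string and the delay between requests are visible settings (the values shown are hypothetical placeholders), and each request is logged as it happens. It uses only `urllib.request` and `time` from Python’s standard library.

```python
import time
from urllib.request import Request, urlopen

# Hypothetical settings, kept in one place so teammates can see exactly
# how the scraper identifies itself and how often it contacts the server.
USER_AGENT = "workshop-scraper/0.1 (contact: you@example.org)"
DELAY_SECONDS = 5


def build_request(url):
    """Attach a descriptive User-Agent header to the request."""
    return Request(url, headers={"User-Agent": USER_AGENT})


def fetch_all(urls, delay=DELAY_SECONDS, log=print):
    """Fetch each URL in order, pausing between requests and logging each step."""
    pages = {}
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay)  # be gentle with the server
        log(f"GET {url}")
        with urlopen(build_request(url), timeout=10) as response:
            pages[url] = response.read().decode("utf-8", errors="replace")
    return pages
```

Given a list of pages you are permitted to scrape, `fetch_all(urls)` returns a dict mapping each URL to its HTML, which you could then hand to a parser. Rerunning the script repeats exactly the same steps, which is hard to guarantee with clicks in a GUI.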

Common programming languages such as Python and R have robust online communities and packages (bs4 and rvest, respectively) that support web-scraping projects. When you feel ready to move beyond the GUI plug-in, you can check out some of the guides we have written or found to get you started:

If you encounter any issues along the way, or would like to discuss your project further, you can always reach out and join us through our Python User Group (PUG) or R User Group (RUG), or set up a 1:1 consultation with a digital fellow.

I hope this recap is useful when you are starting your project, and best of luck!